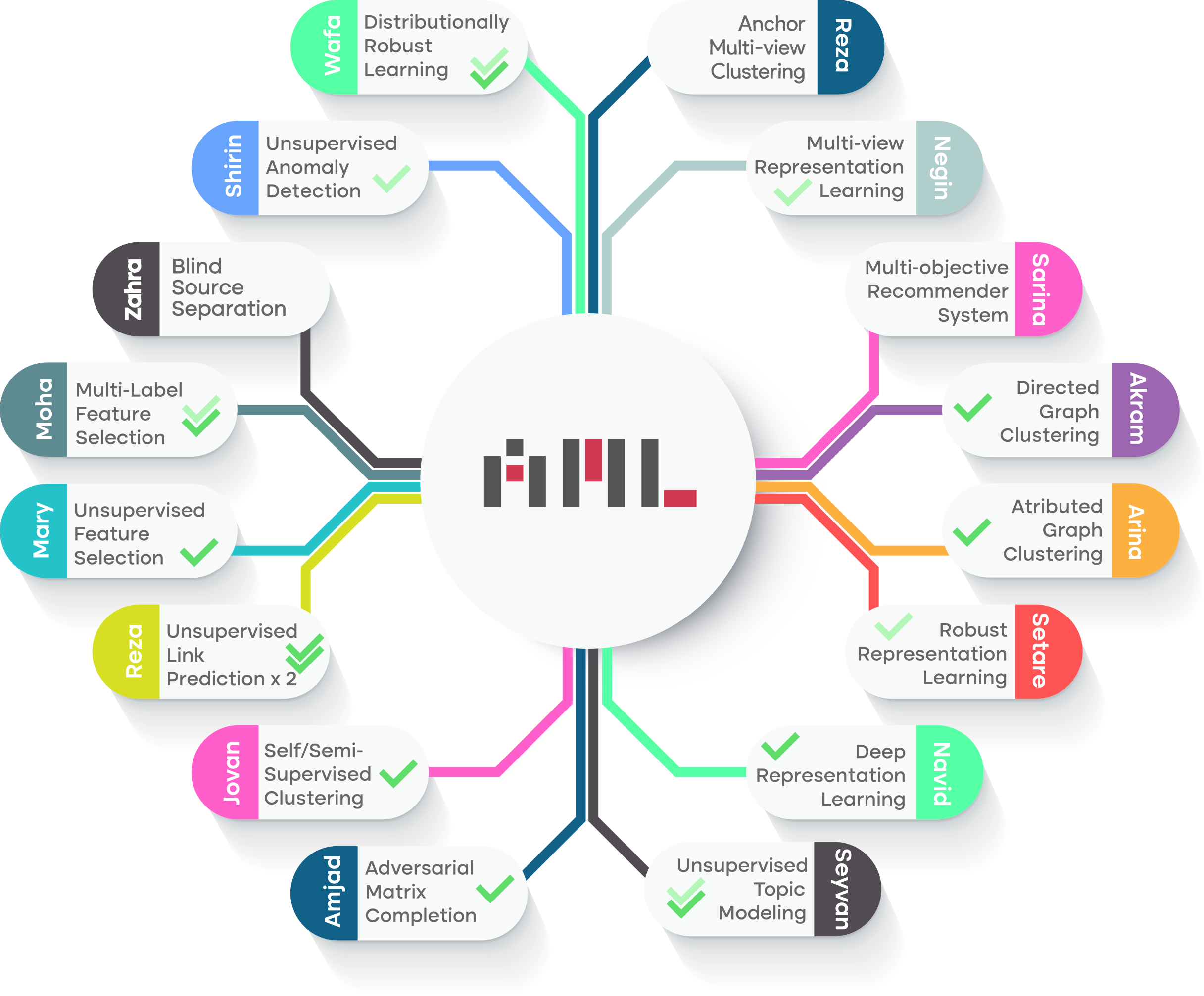

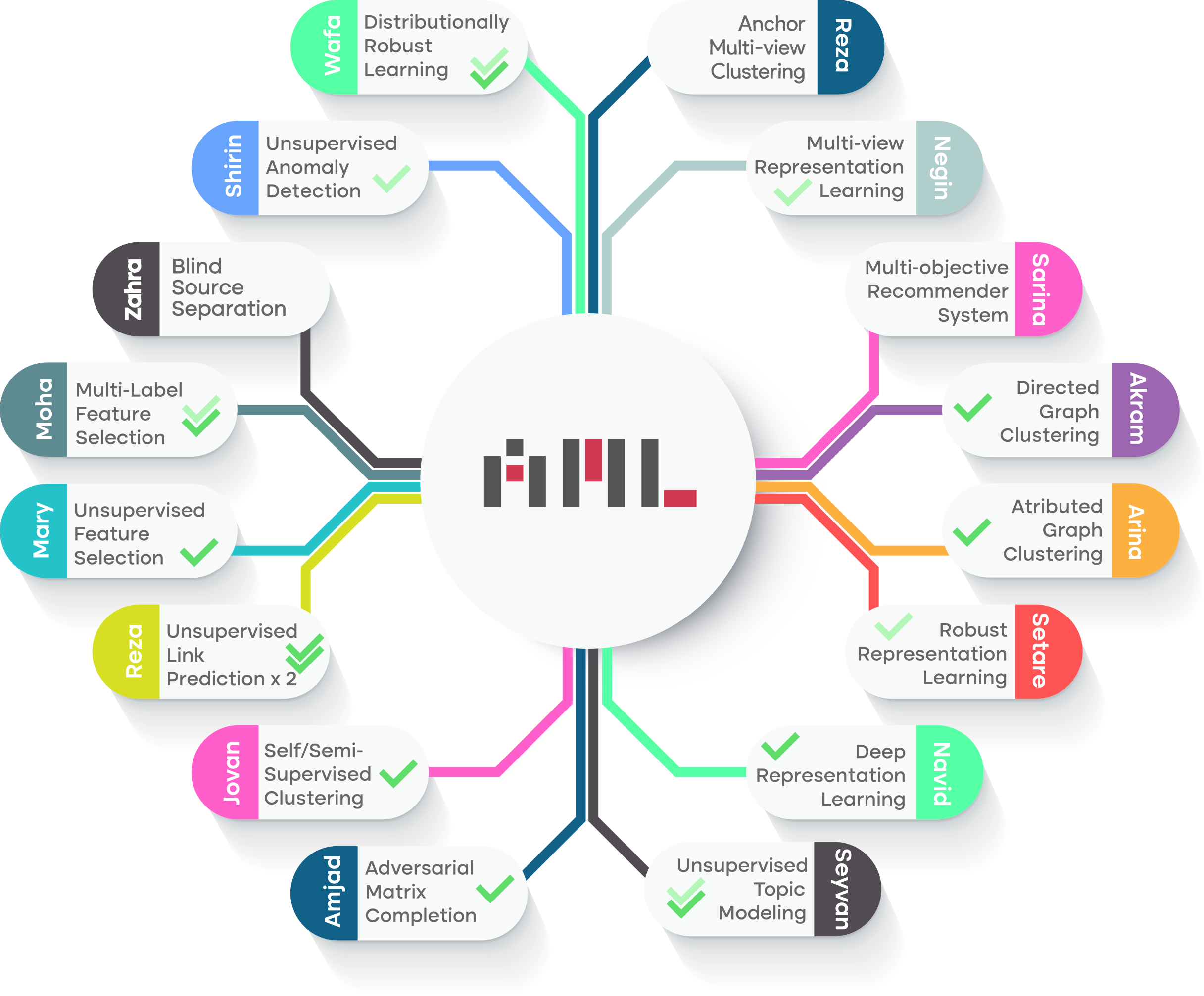

This paper proposes a novel framework, Distributionally Robust Nonnegative Matrix Factorization with Self-Paced Adaptive Multi-Loss Fusion (DRNMF-SP), to enhance robustness against both moderate and extreme outliers across various noise types. DRNMF-SP adopts a multi-objective optimization strategy that integrates multiple loss functions through a weighted sum, reflecting the uncertainty in selecting a single objective. It employs distributionally robust optimization, minimizing the worst-case expected loss over a probabilistic ambiguity set. Self-paced learning lets the model learn first from clean instances and defer noisy samples to later stages, which enhances robustness to heavy-tailed noise distributions.

Information Sciences, 2026
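For the DRNMF-SP entry, the interplay of multi-loss fusion, a worst-case (distributionally robust) choice of loss weights, and self-paced selection can be illustrated on a handful of residuals. This is a minimal sketch under stated assumptions, not the paper's algorithm: the three losses (squared, absolute, Cauchy), the finite ambiguity set of weight vectors, and the hard self-paced threshold `lam` are all hypothetical choices.

```python
import math

# Three illustrative per-entry losses on a residual r = X_ij - (WH)_ij (assumed choice).
def squared(r):
    return r * r

def absolute(r):
    return abs(r)

def cauchy(r, c=1.0):
    return c * c * math.log(1.0 + (r / c) ** 2)

LOSSES = (squared, absolute, cauchy)

def fused_loss(r, weights):
    """Weighted sum of the candidate losses for a single residual."""
    return sum(w * f(r) for w, f in zip(weights, LOSSES))

def worst_case_weights(residuals, ambiguity_set):
    """Inner maximisation of a DRO objective over a finite, hypothetical ambiguity set:
    return the weight vector with the largest average fused loss."""
    def average_loss(weights):
        return sum(fused_loss(r, weights) for r in residuals) / len(residuals)
    return max(ambiguity_set, key=average_loss)

def self_paced_weights(losses, lam):
    """Hard self-paced weights: an entry is used (v = 1) only if its loss is below lam."""
    return [1 if loss < lam else 0 for loss in losses]

residuals = [0.1, -0.2, 0.05, 3.0]  # the last residual mimics an outlier
ambiguity_set = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1 / 3, 1 / 3, 1 / 3)]
w = worst_case_weights(residuals, ambiguity_set)
v = self_paced_weights([fused_loss(r, w) for r in residuals], lam=0.5)
```

Here the squared loss is the worst case, and the outlying residual is deferred (`v[3] == 0`). In a full training loop, `lam` would be increased gradually so that harder entries join later, and the factors would be updated only on the selected entries.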

This paper proposes a self-representation factorization model for data clustering that incorporates local information into its learning process. The Regularized Encoder-Decoder NMF model based on β-divergence (β-REDNMF) integrates encoder and decoder factorizations into a single β-divergence cost function, in which the two factorizations mutually verify and refine each other, yielding more distinct clusters. To incorporate local information, we add a graph regularization term to the model. Owing to its autoencoder-like architecture and its use of local information, β-REDNMF produces more informative embeddings whose generalization abilities extend to various data types. We present an efficient and effective optimization algorithm based on multiplicative update rules to solve the unified model.

Pattern Recognition, 2026
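The β-divergence underlying β-REDNMF is a standard family. It contains the Itakura-Saito (β = 0), generalized Kullback-Leibler (β = 1), and halved squared Euclidean (β = 2) costs as special cases. The following is a minimal element-wise sketch of that cost only. The encoder-decoder coupling and the graph regularizer of the paper are not reproduced.

```python
import math

def beta_divergence(x, y, beta):
    """Element-wise beta-divergence d_beta(x | y).

    Valid for x > 0 and y > 0; x = 0 is also accepted when beta > 0."""
    if beta == 0:  # Itakura-Saito divergence
        return x / y - math.log(x / y) - 1.0
    if beta == 1:  # generalized Kullback-Leibler divergence
        return (x * math.log(x / y) if x > 0 else 0.0) - x + y
    # General case; beta = 2 reduces to (x - y) ** 2 / 2
    return (x ** beta + (beta - 1) * y ** beta - beta * x * y ** (beta - 1)) / (beta * (beta - 1))

def total_beta_divergence(X, Y, beta):
    """Sum of element-wise divergences between two equally shaped matrices (lists of rows)."""
    return sum(beta_divergence(x, y, beta)
               for row_x, row_y in zip(X, Y)
               for x, y in zip(row_x, row_y))
```

In a factorization setting, `X` would be the data matrix and `Y` its reconstruction. The value of β controls how strongly large entries dominate the fit.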

This paper proposes a new robust model, instance-wise distributionally robust NMF (iDRNMF), that can handle a wide range of noise distributions. By leveraging a weighted-sum multi-objective method, iDRNMF can handle multiple noise distributions and their combinations. Furthermore, whereas entry-wise models assume that noise contaminates individual matrix entries, the proposed instance-wise model assumes that noise contaminates entire data instances (columns of the input matrix). The instance-wise model is often more appropriate for data representation tasks, because it addresses noise affecting entire feature vectors rather than individual features.

Pattern Recognition, 2026
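The entry-wise versus instance-wise distinction in the iDRNMF entry comes down to how the residual $X - WH$ is aggregated. The sketch below illustrates this point only and is not the iDRNMF objective. It computes one residual norm per column (data instance) and combines two per-instance losses with a weighted sum. The specific losses and the default weights are assumptions.

```python
import math

def column_residual_norms(X, WH):
    """l2 norm of each column of X - WH (columns are data instances)."""
    n_rows, n_cols = len(X), len(X[0])
    return [math.sqrt(sum((X[i][j] - WH[i][j]) ** 2 for i in range(n_rows)))
            for j in range(n_cols)]

def entrywise_l1(X, WH):
    """Entry-wise loss: every matrix entry is treated as a separate observation."""
    return sum(abs(x - y) for rx, ry in zip(X, WH) for x, y in zip(rx, ry))

def instancewise_loss(X, WH, weights=(0.5, 0.5)):
    """Weighted sum of two per-instance losses (hypothetical choice of losses and weights)."""
    norms = column_residual_norms(X, WH)
    squared = sum(e * e for e in norms)  # Frobenius-type: outlying columns dominate
    robust = sum(norms)                  # l2,1-type: grows linearly in a column's error
    return weights[0] * squared + weights[1] * robust

X = [[1.0, 0.0], [0.0, 1.0]]
WH = [[1.0, 3.0], [0.0, 5.0]]  # the second column (instance) is badly reconstructed
```

With this toy input, the whole error is attributed to the second instance, whose residual norm is 5. An instance-wise model can then down-weight or re-weight that column as a unit.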

This paper proposes a self-representation factorization model for text clustering that incorporates semantic information into its learning process. The Semantic-aware Encoder-Decoder NMF model based on Kullback-Leibler divergence (SEDNMFk) integrates encoder and decoder factorizations into a Kullback-Leibler cost function, in which the two factorizations mutually verify and refine each other, yielding more distinct clusters. To further enhance the semantic properties of the method, we add a tailored semantic regularization to the model. Owing to its autoencoder-like architecture and its use of contextual information, SEDNMFk produces more informative word embeddings whose generalization abilities extend to out-of-sample data.

International Journal of Machine Learning and Cybernetics, 2025
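SEDNMFk builds on the Kullback-Leibler cost. For orientation, the following is a minimal sketch of the classic Lee-Seung multiplicative updates for plain KL-NMF, $X \approx WH$. It omits the encoder term and the semantic regularizer, so it shows only the building block, not SEDNMFk itself. The random initialization and the `eps` guard are assumptions.

```python
import random

def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def ratio(X, WH, eps):
    """Element-wise X / WH, with eps guarding against division by zero."""
    return [[x / (y + eps) for x, y in zip(rx, ry)] for rx, ry in zip(X, WH)]

def kl_nmf(X, rank, iters=200, eps=1e-10, seed=0):
    """Plain KL-NMF, X ~ W H, using the Lee-Seung multiplicative updates."""
    rng = random.Random(seed)
    m, n = len(X), len(X[0])
    W = [[rng.random() + 0.1 for _ in range(rank)] for _ in range(m)]
    H = [[rng.random() + 0.1 for _ in range(n)] for _ in range(rank)]
    for _ in range(iters):
        # H_aj <- H_aj * sum_i W_ia X_ij / (WH)_ij / sum_i W_ia
        R = ratio(X, matmul(W, H), eps)
        w_sums = [sum(W[i][a] for i in range(m)) for a in range(rank)]
        H = [[H[a][j] * sum(W[i][a] * R[i][j] for i in range(m)) / (w_sums[a] + eps)
              for j in range(n)] for a in range(rank)]
        # W_ia <- W_ia * sum_j H_aj X_ij / (WH)_ij / sum_j H_aj
        R = ratio(X, matmul(W, H), eps)
        h_sums = [sum(H[a]) for a in range(rank)]
        W = [[W[i][a] * sum(H[a][j] * R[i][j] for j in range(n)) / (h_sums[a] + eps)
              for a in range(rank)] for i in range(m)]
    return W, H

X = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]  # an exactly rank-1 nonnegative matrix
W, H = kl_nmf(X, rank=1, iters=50)
```

Because the updates only multiply by nonnegative ratios, `W` and `H` stay nonnegative without any projection step.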

This paper proposes the Diverse Joint Nonnegative Matrix Tri-Factorization (Div-JNMTF), an embedding-based model for detecting communities in attributed graphs. The novel JNMTF model aims to extract two distinct node representations, one from topological data and one from non-topological data. Simultaneously, a diversity regularization based on the Hilbert-Schmidt Independence Criterion (HSIC) is employed to reduce redundant information between the two node representations while encouraging distinct contributions from both types of information.

Applied Soft Computing, 2024
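HSIC, used as the diversity term in Div-JNMTF, measures the statistical dependence between two representations of the same nodes. The following is a minimal sketch of the standard empirical estimator $\mathrm{HSIC}(U, V) = \mathrm{tr}(KCLC)/(n-1)^2$ with linear kernels $K = UU^\top$ and $L = VV^\top$ and centering matrix $C = I - \tfrac{1}{n}\mathbf{1}\mathbf{1}^\top$. Linear kernels are an assumption made here. With them, the trace equals $\|(CU)^\top (CV)\|_F^2$, which avoids forming $n \times n$ matrices.

```python
def center_columns(M):
    """Subtract each column's mean (equivalent to left-multiplying by C)."""
    n = len(M)
    means = [sum(col) / n for col in zip(*M)]
    return [[x - mu for x, mu in zip(row, means)] for row in M]

def hsic_linear(U, V):
    """Empirical HSIC with linear kernels: ||(CU)^T (CV)||_F^2 / (n - 1)^2.

    U (n x d1) and V (n x d2) are node-representation matrices with one row per node."""
    n = len(U)
    Uc, Vc = center_columns(U), center_columns(V)
    cross = [[sum(Uc[i][a] * Vc[i][b] for i in range(n)) for b in range(len(V[0]))]
             for a in range(len(U[0]))]
    return sum(c * c for row in cross for c in row) / (n - 1) ** 2
```

As a penalty, a value near zero indicates that the two representations carry little linearly shared information. Perfectly correlated representations give a large value.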

This paper proposes Link Prediction using Adversarial Deep NMF (LPADNMF), a novel model that enhances the generalization of network reconstruction in sparse graphs. The main contribution is an adversarial training scheme that applies a bounded attack to the input, using the $\ell_{2,1}$ norm to generate diverse perturbations. This adversarial training aims to improve the model's robustness and prevent overfitting, particularly when training data are limited.

Engineering Applications of Artificial Intelligence, 2024
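The $\ell_{2,1}$ norm in the LPADNMF entry sums the $\ell_2$ norms of a matrix's columns. A budget on it limits the total perturbation while still allowing a few columns to be perturbed strongly. The sketch below is an assumption rather than the LPADNMF attack. It draws a random Gaussian perturbation and rescales it so that its $\ell_{2,1}$ norm does not exceed `eps`. Rescaling keeps the perturbation feasible but is not the Euclidean projection onto the $\ell_{2,1}$ ball. The column-wise convention, the toy graph, and the clipping step are also illustrative choices.

```python
import math
import random

def l21_norm(E):
    """Sum of the l2 norms of the columns of E (a list of rows)."""
    return sum(math.sqrt(sum(x * x for x in col)) for col in zip(*E))

def bounded_perturbation(n_rows, n_cols, eps, seed=0):
    """Gaussian perturbation rescaled so that ||E||_{2,1} <= eps (rescaling, not projection)."""
    rng = random.Random(seed)
    E = [[rng.gauss(0.0, 1.0) for _ in range(n_cols)] for _ in range(n_rows)]
    norm = l21_norm(E)
    scale = min(1.0, eps / norm) if norm > 0 else 0.0
    return [[scale * x for x in row] for row in E]

A = [[0.0, 1.0, 1.0], [1.0, 0.0, 0.0], [1.0, 0.0, 0.0]]  # toy adjacency matrix
E = bounded_perturbation(3, 3, eps=0.5)
# Clip at zero so the perturbed input stays nonnegative, as NMF requires.
A_adv = [[max(0.0, a + e) for a, e in zip(row_a, row_e)] for row_a, row_e in zip(A, E)]
```

A gradient-based attack would replace the random direction with one that increases the reconstruction loss, keeping the same norm budget.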

This paper proposes the Orthogonal Encoder-Decoder factorization for unsupervised Feature Selection (OEDFS) model, which combines the strengths of self-representation and pseudo-supervised approaches. The method draws inspiration from the self-representation properties of autoencoder architectures and uses encoder and decoder factorizations to simulate a pseudo-supervised feature selection approach. To further enhance the part-based characteristics of the factorization, we incorporate orthogonality constraints and local structure preservation into the objective function.

Information Sciences, 2024

This paper proposes a feature selection model that exploits explicit global and local label correlations to select features that are discriminative across multiple labels. In addition, by representing the feature matrix and the label matrix in a shared latent space, the model aims to capture the underlying correlations between features and labels. This shared representation can reveal common patterns or relationships across multiple labels and features. An objective function with $\ell_{2,1}$-norm regularization is formulated, and an iterative alternating optimization algorithm is designed to obtain sparse coefficients for multi-label feature selection.

Expert Systems with Applications, 2024
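In the multi-label feature selection entry, an $\ell_{2,1}$-norm penalty on the coefficient matrix drives whole rows, one per feature, to zero. Features are then typically ranked by the $\ell_2$ norms of their rows. The sketch below shows only this final selection step. It assumes a coefficient matrix that has already been learned and is laid out with one row per feature. Both the matrix values and the layout are hypothetical.

```python
import math

def rank_features(coef, k):
    """Indices of the k features whose coefficient rows have the largest l2 norm.

    coef is a d x c list of rows: row i holds feature i's coefficients for c labels."""
    scores = [math.sqrt(sum(w * w for w in row)) for row in coef]
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]

coef = [[0.0, 0.0],   # feature 0: row zeroed out by the l2,1 penalty
        [0.9, 0.4],
        [0.1, 0.0],
        [0.0, 1.2]]
print(rank_features(coef, 2))  # [3, 1]
```

Ranking by row norm rather than by single entries keeps a feature that matters for any label, which suits the multi-label setting.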

This paper proposes a graph-specific Deep NMF model based on Asymmetric NMF that can handle both undirected and directed graphs. Inspired by hierarchical graph clustering and graph summarization approaches, Deep Asymmetric Nonnegative Matrix Factorization (DAsNMF) is introduced for the directed graph clustering problem. In a pseudo-hierarchical clustering setting, DAsNMF decomposes the input graph to extract node representations, from low level to high level, together with graph representations (summarized graphs).

Pattern Recognition, 2024
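One way to read the summarized graphs in the DAsNMF entry is as cluster-level adjacency matrices. Given a nonnegative node-to-cluster membership matrix $W$ ($n \times k$), the product $W^\top A W$ aggregates edge weights between clusters. Because $A$ need not be symmetric, it also preserves edge direction. Applying this layer after layer gives a coarse-to-fine hierarchy. The sketch below is a hypothetical illustration with hard memberships, not the DAsNMF update rules.

```python
def transpose(M):
    return [list(col) for col in zip(*M)]

def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def summarize(A, W):
    """Cluster-level graph W^T A W; entry (p, q) sums edge weights from cluster p to cluster q."""
    return matmul(matmul(transpose(W), A), W)

# Directed toy graph on 4 nodes: 0->1, 1->0, 1->2, 2->3
A = [[0, 1, 0, 0],
     [1, 0, 1, 0],
     [0, 0, 0, 1],
     [0, 0, 0, 0]]
W1 = [[1, 0], [1, 0], [0, 1], [0, 1]]  # nodes {0, 1} -> cluster 0, {2, 3} -> cluster 1
S1 = summarize(A, W1)                  # first-level summarized graph
W2 = [[1], [1]]                        # second level: merge both clusters
S2 = summarize(S1, W2)
```

`S1` is asymmetric: one edge goes from cluster 0 to cluster 1, and none goes back. This directional information would be lost by symmetrizing the graph.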

We propose a novel link prediction method based on adversarial NMF that reconstructs a sparse network through an efficient adversarial training algorithm. Unlike conventional NMF methods, our model considers potential test adversaries beyond the predefined bounds and provides a robust reconstruction with good generalization power. In addition, to preserve the local structure of the network, we use the common neighbor algorithm to extract node similarities and apply them to the low-dimensional latent representation.

Knowledge-Based Systems, 2023
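The common-neighbor similarity in the adversarial NMF link prediction entry counts, for each pair of nodes, the neighbors they share. For the 0/1 adjacency matrix $A$ of an undirected graph, the count for nodes $u$ and $v$ equals $(A^2)_{uv}$. Below is a minimal sketch using neighbor sets. How the similarity is weighted inside the adversarial NMF objective is not reproduced.

```python
from itertools import combinations

def common_neighbors(edges, n):
    """Return {(u, v): number of shared neighbors} for every node pair u < v."""
    nbrs = [set() for _ in range(n)]
    for u, v in edges:  # undirected edges
        nbrs[u].add(v)
        nbrs[v].add(u)
    return {(u, v): len(nbrs[u] & nbrs[v]) for u, v in combinations(range(n), 2)}

cn = common_neighbors([(0, 1), (0, 2), (1, 2), (2, 3), (1, 3)], n=4)
```

Nodes 0 and 3 are not linked but share two neighbors, so a similarity-aware model would favor a predicted link between them.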

In this paper, we design an effective Self-Supervised Semi-Supervised Nonnegative Matrix Factorization (S4NMF) for semi-supervised clustering. S4NMF directly extracts a consensus result from an ensemble of NMFs with similarity and dissimilarity regularizations. In an iterative process, this self-supervisory information is fed back into the model to boost semi-supervised learning and form more distinct clusters.

Pattern Recognition, 2023
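For the S4NMF entry, a common way to extract a consensus from an ensemble of clusterings is the co-association matrix. It records the fraction of ensemble members that place two samples in the same cluster. High and low values can then be turned into pairwise similarity (must-link) and dissimilarity (cannot-link) constraints. The sketch below illustrates that idea with hard labels, such as the argmax of each NMF's coefficient matrix. The thresholds `hi` and `lo` are assumptions, and the exact consensus rule used by S4NMF may differ.

```python
from itertools import combinations

def co_association(labelings):
    """Fraction of labelings that assign each pair (i, j), i < j, to the same cluster."""
    n = len(labelings[0])
    return {(i, j): sum(lab[i] == lab[j] for lab in labelings) / len(labelings)
            for i, j in combinations(range(n), 2)}

def pairwise_constraints(co, hi=0.8, lo=0.2):
    """Strong agreement becomes must-link; strong disagreement becomes cannot-link."""
    must = [pair for pair, s in co.items() if s >= hi]
    cannot = [pair for pair, s in co.items() if s <= lo]
    return must, cannot

# Three runs (e.g. argmax of each NMF's coefficient matrix) on five samples.
runs = [[0, 0, 1, 1, 1],
        [1, 1, 0, 0, 1],
        [0, 0, 1, 1, 0]]
must, cannot = pairwise_constraints(co_association(runs))
```

Co-association only compares labels within each run, so it is unaffected by the arbitrary cluster numbering across runs (the second run swaps ids 0 and 1).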

This paper proposes elastic adversarial training to design a high-capacity Deep Nonnegative Matrix Factorization (DNMF) model that properly discovers the latent structure of the data and has enhanced generalization abilities. Specifically, we perturb the inputs of DNMF with an elastic loss that is interpolated and adaptively balanced between the Frobenius and $\ell_{2,1}$ norms. The model not only dispenses with adversarial DNMF generation but is also robust to a mixture of multiple attacks, attaining improved accuracy.

Information Sciences, 2023
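For the elastic adversarial DNMF entry, an elastic loss between the two norms can be illustrated as a convex combination of two terms. The squared Frobenius norm penalizes large entries heavily. The $\ell_{2,1}$ norm grows only linearly in each column's error, so it is less dominated by a few strongly perturbed columns. The mixing parameter `alpha` and the column-wise convention are hypothetical. How the paper adapts the balance during training is not reproduced.

```python
import math

def frobenius_sq(E):
    """Squared Frobenius norm of a matrix stored as a list of rows."""
    return sum(x * x for row in E for x in row)

def l21(E):
    """Sum of column l2 norms."""
    return sum(math.sqrt(sum(x * x for x in col)) for col in zip(*E))

def elastic_loss(E, alpha):
    """alpha = 1 gives the squared Frobenius loss, alpha = 0 the l2,1 loss."""
    return alpha * frobenius_sq(E) + (1.0 - alpha) * l21(E)

E = [[3.0, 0.0], [4.0, 1.0]]  # residual or perturbation matrix
```

For this `E`, the two endpoints are 26 and 6, and `alpha = 0.5` gives 16. Moving `alpha` shifts the loss between outlier-sensitive and outlier-tolerant behavior.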

This paper proposes the Deep Autoencoder-like NMF with Contrastive Regularization and Feature Relationship preservation (DANMF-CRFR) to address data representation challenges. Inspired by contrastive learning, this deep model learns discriminative and informative deep features while enforcing the local and global structures of the data on its decoder and encoder components. DANMF-CRFR also imposes feature correlations on the basis matrices during feature learning to improve its part-based learning capabilities.

Expert Systems with Applications, 2023

Self-Paced Multi-Label Learning with Diversity

In this paper, we propose self-paced multi-label learning with diversity (SPMLD), which aims to cover diverse labels according to its learning pace. The proposed framework is applied to an efficient correlation-based multi-label method. The non-convex objective function is optimized by an extension of the block coordinate descent algorithm. Empirical evaluations on real-world datasets with varying numbers of features and labels indicate the effectiveness of the proposed predictive model.

Asian Conference on Machine Learning, 2019
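For reference, self-paced learning with diversity (SPLD), in the form introduced by Jiang et al., has a closed-form selection step. Within each group, samples are sorted by loss. The sample of rank $r$ is selected if its loss is below $\lambda + \gamma/(\sqrt{r} + \sqrt{r-1})$. The threshold therefore tightens as more samples from the same group are taken, which spreads selection across groups. The sketch below applies that rule with label ids as groups. This is an assumption about how diversity over labels could be realized, not the SPMLD solver.

```python
import math
from collections import defaultdict

def spld_select(losses, groups, lam, gamma):
    """Closed-form SPLD selection: returns a 0/1 weight per sample.

    losses[i] is sample i's loss and groups[i] its group (here, a label id)."""
    members = defaultdict(list)
    for i, g in enumerate(groups):
        members[g].append(i)
    v = [0] * len(losses)
    for idx in members.values():
        idx.sort(key=lambda i: losses[i])  # easiest samples first within each group
        for rank, i in enumerate(idx, start=1):
            threshold = lam + gamma / (math.sqrt(rank) + math.sqrt(rank - 1))
            if losses[i] < threshold:
                v[i] = 1
    return v

v = spld_select([0.1, 0.2, 0.3, 0.35], groups=[0, 0, 0, 1], lam=0.1, gamma=0.3)
```

The sample with loss 0.35 is selected while the easier sample with loss 0.3 is not, because the former is the first sample from an otherwise unrepresented group. Plain self-paced learning, which thresholds on `lam` alone, would make the opposite choice.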

A Weakly-Supervised Factorization Method with Dynamic Graph Embedding

In this paper, a dynamic weakly supervised factorization is proposed to learn a classifier from partially labeled data within the NMF framework. A label propagation mechanism is used to initialize the label matrix factor of NMF, and a graph-based method dynamically updates the partially labeled data at each iteration. This mechanism enriches the supervised information at each iteration and consequently improves classification performance.

Artificial Intelligence and Signal Processing Conference (AISP), 2017
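The label propagation step in the weakly supervised factorization entry can be illustrated with the classic iterative scheme of Zhou et al., $F \leftarrow \alpha S F + (1 - \alpha) Y$. Here $S = D^{-1/2} A D^{-1/2}$ is the symmetrically normalized similarity matrix, and $Y$ holds one-hot rows for labeled samples and zero rows for unlabeled ones. This scheme is assumed for illustration. The paper's similarity graph and its dynamic update are not reproduced, and the argmax output stands in for a label matrix initialization.

```python
import math

def propagate_labels(A, Y, alpha=0.9, iters=100):
    """Iterate F <- alpha * S F + (1 - alpha) * Y with S = D^-1/2 A D^-1/2.

    A: symmetric nonnegative similarity matrix (list of rows, zero diagonal).
    Y: n x c matrix with one-hot rows for labeled samples and zero rows otherwise.
    Returns the predicted class index for every sample."""
    n, c = len(A), len(Y[0])
    deg = [sum(row) for row in A]
    S = [[A[i][j] / math.sqrt(deg[i] * deg[j]) if deg[i] > 0 and deg[j] > 0 else 0.0
          for j in range(n)] for i in range(n)]
    F = [row[:] for row in Y]
    for _ in range(iters):
        F = [[alpha * sum(S[i][k] * F[k][j] for k in range(n)) + (1 - alpha) * Y[i][j]
              for j in range(c)] for i in range(n)]
    return [max(range(c), key=lambda j: F[i][j]) for i in range(n)]

# Chain graph 0-1-2-3; node 0 is labeled class 0 and node 3 is labeled class 1.
A = [[0, 1, 0, 0], [1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0]]
Y = [[1, 0], [0, 0], [0, 0], [0, 1]]
labels = propagate_labels(A, Y)
```

Each unlabeled node takes the label of the nearer labeled endpoint. The soft scores `F` (before the argmax) could serve as a nonnegative initialization for a label factor.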